The trustworthiness of a web page might help it rise up Google’s rankings if the search giant starts to measure quality by facts, not just links – New Scientist.

New sites and web businesses struggle to get onto the first page of Google's search results. They have to optimize for search, build their websites, promote themselves, and take into account how their customers behave. However, according to a report in New Scientist magazine, Google has developed an algorithm that would rank sites by their "trustworthiness", by identifying and counting false facts on each web page.

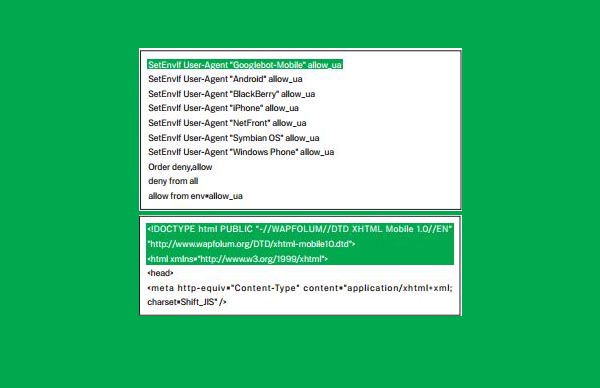

Image Source: Google User Content – Content for typical website search engine optimization

I like the idea, but we need to proceed with caution. Google needs to rank sites by how reliable their claims actually are, not by how reliable GOOGLE THINKS their claims are.

Google is without a doubt a cornerstone of the web, which is itself a major milestone in human development. We are rapidly approaching a point where we have a global system of shared human knowledge and communication. The web is now the dominant medium for human thoughts and ideas.

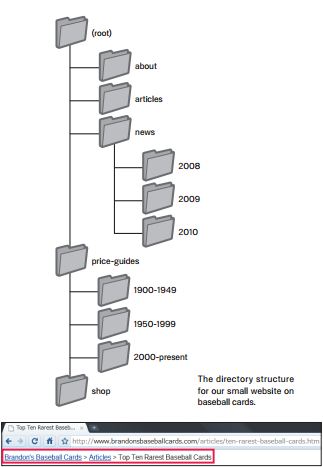

Google is not only a search engine; it is the dominant gateway to this information. This makes Google's ranking an important metric for any site. In fact, there is a whole industry, called Search Engine Optimization (SEO), dedicated to improving sites' Google rankings.

Google's biggest innovation was to rank sites by the number and quality of their incoming links. Links became a valuable proxy for quality, and the approach rewards users with a useful ranking of the sites they are searching for.
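To make link-based ranking concrete, here is a minimal sketch of the classic PageRank power iteration from the original published formulation. It is only an illustration: Google's current ranking combines many more signals. The function name `pagerank`, the damping factor of 0.85 (the commonly cited value), and the toy link graph are assumptions chosen for this example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration (illustrative sketch only).

    links: dict mapping each page to the list of pages it links to.
    Returns a dict mapping page -> score; scores sum to 1.
    """
    # Collect every page, including ones that only appear as link targets.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}

    for _ in range(iterations):
        # Every page gets the "random jump" share of (1 - damping) / n.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its vote evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical toy web: "c" is linked to by three pages, "d" by none.
    web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
    for page, score in sorted(pagerank(web).items(), key=lambda kv: kv[1], reverse=True):
        print(page, round(score, 3))
```

In this toy graph, page "c" ends up on top because it collects links from three other pages, while "d", which nobody links to, keeps only the random-jump share. That is exactly the popularity signal the rest of this post contrasts with fact-based trust.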

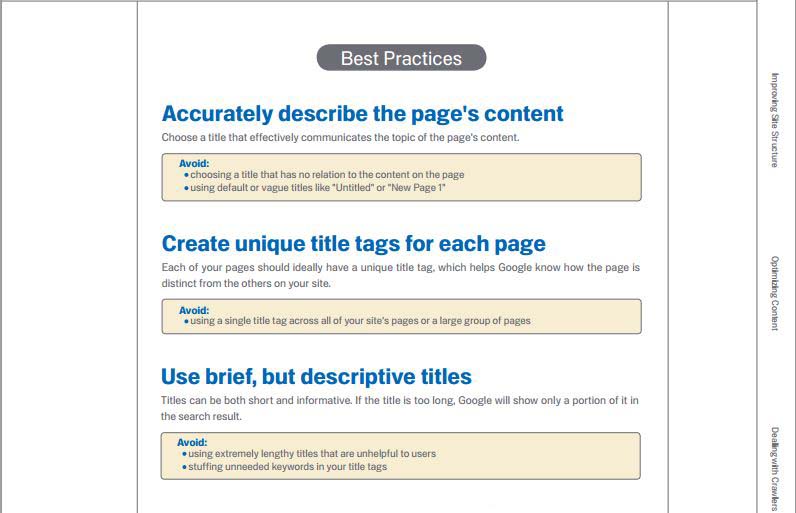

It is not easy to game the system. You cannot improve your Google rank just by repeating search terms in your page's code. In fact, several Google employees have written blog posts describing how to tune your site for better SEO. Still, SEO is often an attempt to game Google's ranking system, and Google does not like that. It wants the most important and relevant websites to rise to the top.

The problem with Google's current ranking system is that it ranks a website by its popularity. While this works for some kinds of information, it is problematic for certain subjects, such as health, where the most popular pages are not necessarily the most accurate.

Xin Luna Dong, Evgeniy Gabrilovich, Kevin Murphy, Van Dang, Wilko Horn, Camillo Lugaresi, Shaohua Sun and Wei Zhang, researchers at Google, published a paper on arXiv (the preprint server operated by Cornell University) in which they state that the quality of web sources has traditionally been evaluated using exogenous signals such as the hyperlink structure of the web graph.

“We propose a new approach that relies on endogenous signals, namely, the correctness of factual information provided by the source. A source that has few false facts is considered to be trustworthy. The facts are automatically extracted from each source by information extraction methods commonly used to construct knowledge bases. We propose a way to distinguish errors made in the extraction process from factual errors in the web source per se, by using joint inference in a novel multi-layer probabilistic model. We call the trustworthiness score we computed Knowledge-Based Trust (KBT). On synthetic data, we show that our method can reliably compute the true trustworthiness levels of the sources. We then apply it to a database of 2.8B facts extracted from the web, and thereby estimate the trustworthiness of 119M web pages. Manual evaluation of a subset of the results confirms the effectiveness of the method.”
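The core idea in the abstract is that a source's trust comes from the facts it states rather than from its links. The paper's actual model is a multi-layer probabilistic model that also separates extraction errors from genuine source errors. The sketch below is much simpler: a single-layer weighted-voting loop in the general spirit of truth-discovery methods, and it assumes every fact was extracted correctly. The function `estimate_trust`, the starting trust of 0.8, and the sample (subject, predicate) facts are hypothetical.

```python
from collections import defaultdict


def estimate_trust(claims, iterations=20, initial_trust=0.8):
    """Jointly estimate source trust and fact truth by weighted voting.

    Simplified illustration, NOT the paper's multi-layer KBT model:
    extraction is assumed to be perfect.

    claims: dict mapping source -> {(subject, predicate): claimed_object}.
    Returns (trust, truth): trust score per source and the winning value per item.
    """
    trust = {source: initial_trust for source in claims}
    truth = {}
    for _ in range(iterations):
        # Step 1: each source votes for its claimed value, weighted by its trust.
        votes = defaultdict(lambda: defaultdict(float))
        for source, facts in claims.items():
            for item, value in facts.items():
                votes[item][value] += trust[source]

        # Confidence in a value is its share of the total vote for that item.
        confidence = {}
        for item, counts in votes.items():
            total = sum(counts.values())
            confidence[item] = {value: weight / total for value, weight in counts.items()}
            truth[item] = max(counts, key=counts.get)

        # Step 2: a source's trust is the average confidence of the values it asserts.
        for source, facts in claims.items():
            if facts:
                trust[source] = sum(confidence[item][value] for item, value in facts.items()) / len(facts)
    return trust, truth


if __name__ == "__main__":
    # Hypothetical extracted facts: site_c gets one fact wrong.
    claims = {
        "site_a": {("Eiffel Tower", "city"): "Paris", ("Paris", "country"): "France"},
        "site_b": {("Eiffel Tower", "city"): "Paris", ("Paris", "country"): "France"},
        "site_c": {("Eiffel Tower", "city"): "Rome", ("Paris", "country"): "France"},
    }
    trust, truth = estimate_trust(claims)
    print(truth)
    for source, score in sorted(trust.items(), key=lambda kv: kv[1], reverse=True):
        print(source, round(score, 3))
```

Trust and truth feed each other here: sources that agree with the weighted consensus gain trust, which gives their claims more weight in the next round, so the site that contradicts the majority drifts down.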

However, it is irritating that Google changes the ranking of sites based on our individual search history. This is meant to tailor results to the user, and it may be useful when someone is searching for something they want to buy. If you are looking for scholarly articles, however, personalization can narrow the results you see on the first page. This feature can be turned off, but it is on by default, and many people don't realize how it affects their searches.

The software works by tapping into the Knowledge Vault, the vast store of facts that Google has pulled off the internet. Facts the web unanimously agrees on are considered a reasonable proxy for truth. Web pages that contain contradictory information are bumped down the rankings – New Scientist.

It is good that Google is not resting on its success. Its new idea is very interesting: by extracting the factual statements on a website and checking their accuracy against a database of knowledge, it is definitely going to start a new trend in the SEO market.

Sources: