Anyone who has been online in the last few days has probably noticed a sudden flood of people uploading anime-style self-portraits.

Their creator, a photo-editing app called Lensa AI, has dubbed them “Magic Avatars,” and they’ve taken over social media. Their popularity has grown in lockstep with that of ChatGPT, OpenAI’s new AI chatbot.

AI programs have had a banner year. With another one, Midjourney, taking the internet by storm, digital designers all over the world are worried they’ll soon be out of work. Text-to-image generators, the most visible of which are OpenAI’s DALL-E, Midjourney, and Stability AI’s Stable Diffusion, have caused havoc in the creative industry.

According to Futurism, a record label unveiled an AI-generated rapper, only to drop it soon afterward. Machine learning has even been used to generate full-fledged conversations with both living and deceased celebrities.

AI has clearly made significant strides recently, and let us not forget the Google engineer who was suspended after going public with his belief that the company’s chatbot, LaMDA, had become sentient.

For years, experts have been working on the underlying technology, but recent advances, combined with significant financial investment, have sparked a mad dash to market. This has resulted in an explosion of consumer goods that incorporate cutting-edge technologies.

The only problem is that neither the goods nor the customers are ready.

Consider those “Magic Avatars,” which appear harmless at first glance. After all, there’s nothing wrong with letting the software turn you into a vibrant avatar. And unlike text-to-image generators, which can conjure anything from a prompt, the app only works from photos you already have.

Artists began raising concerns as soon as the avatars went viral, arguing that Lensa had no safeguards in place to protect the artists whose work may have been used to train its model. Worse still, despite Lensa’s “zero nakedness” usage policy, users found it surprisingly easy to generate nude images of themselves and of anyone else they had photos of.

“The ease with which you can create images of anyone you can imagine (or, at the very least, anyone you have a handful of photos of) is terrifying,” writes TechCrunch’s Haje Jan Kamps.

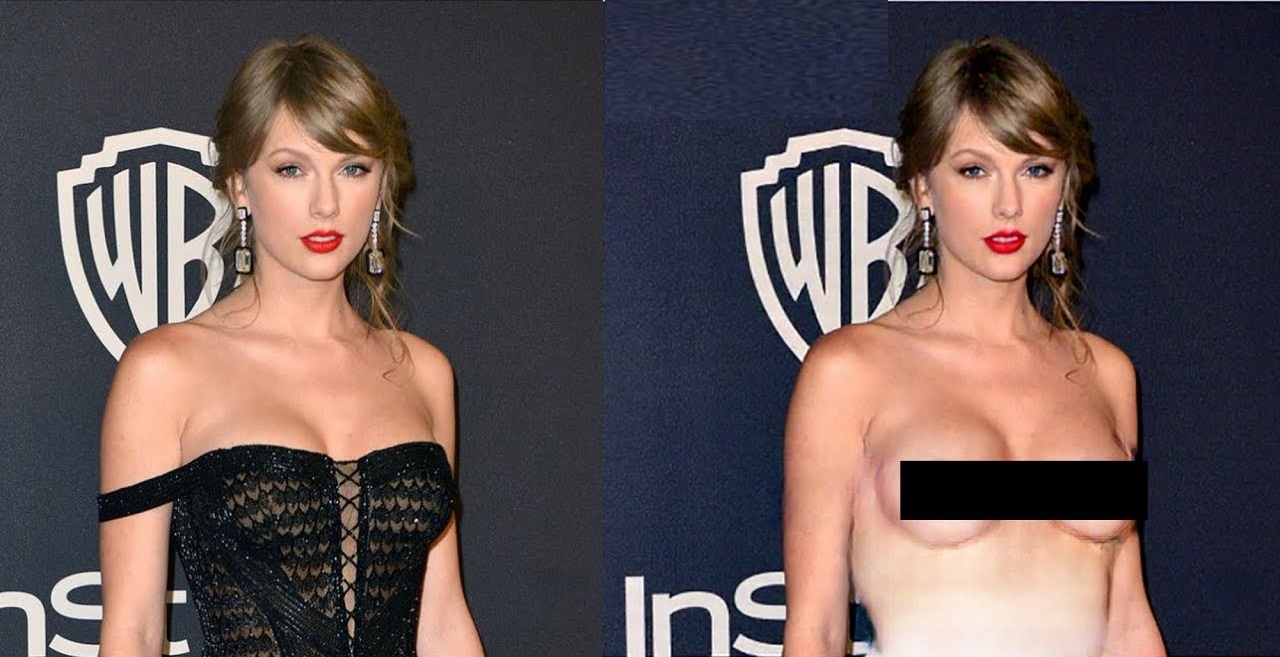

Kamps tested the app’s ability to produce pornographic images by feeding it crudely edited photos of celebrity faces superimposed on naked bodies. The doctored photos easily slipped past all of the app’s supposedly protective features.

“Add NSFW content into the mix, and we’re quickly careening into some pretty murky territory: your friends or some random person you met in a bar and exchanged Facebook friend status with may not have consented to someone generating soft-core porn of them,” he said.

That’s terrible, but it gets much worse. Although Lensa claims it prevents users from generating child pornography, journalist Olivia Snow discovered otherwise after uploading photos of herself as a child to the app’s “Magic Avatars” feature. “I pieced together the required 10 photos to run the app and waited to see how it transformed me from awkward six-year-old to fairy princess,” she wrote in Wired. “The outcomes were horrifying.”

“The end result was fully-nude photos of an adolescent and sometimes childlike face but a distinctly adult body,” she explained. “This set elicited a certain coyness: a bare back, tousled hair, and an avatar with my childlike face holding a leaf between her naked adult breasts.”

The experiences of Kamps and Snow both highlight a frustrating aspect of today’s AI technology: it frequently behaves in ways its designers did not anticipate, and it can even circumvent the safeguards meant to prevent this. The AI industry appears to be moving faster than society, and even its own technology, can keep up with. That is extremely concerning, particularly given what Kamps and Snow found.

Lensa told TechCrunch that users are solely responsible for any sexual content generated in the app, describing any explicit or pornographic images as the “result of deliberate misconduct on the app.” Many in the industry argue that bad actors will always exist and will keep behaving badly. Another common argument is that a skilled Photoshop user could just as easily create anything these applications produce.

Both of these points of view have some validity. However, none of this changes the fact that Lensa’s software, like many others of its kind, makes it far easier for bad actors to do what would otherwise take real skill and effort. For the first time, anyone with access to the right algorithm can produce convincing fake nudes or high-quality renderings of child sexual abuse material.

There is also a Pandora’s box problem. The Lensas of the world can try all they want to lock down their technology, but copycat algorithms that bypass those safeguards will still be developed. It is a foregone conclusion.

Since Lensa’s debacle, there has been growing awareness that real people can suffer severe harm from the premature rollout of AI technologies such as image generators. Meanwhile, the industry appears to be going full throttle, focused on beating competitors to venture capital rather than on making sure its tools are adequately safe.

Keep in mind that nonconsensual porn is just one of many potential hazards here. Another major concern is how easily political disinformation can be spread. And what about automatic text-generation software? Teachers are terrified that students will use it to write their assignments.

AI may make our lives easier, but it will undoubtedly cause many problems, too. Where does it end, when it will soon be entirely possible to fake a politician’s entire speech?

We will likely be able to bring dead actors back to life on the big screen, but will you be able to trust anything you see digitally that isn’t directly in front of you? My guess is no.

Even a seemingly innocuous tool like “Magic Avatars” is a reminder that AI is still an experiment, even as it profoundly alters our reality, and that the collateral damage is not a hypothetical risk but a real one.

Buckle up, people.